At GE HealthCare, responsible AI and sustainability aren’t merely corporate buzzwords: they are fundamental principles woven into the fabric of our AI innovations. With over 125 years of experience developing medical devices and an installed base exceeding 5 million units worldwide, we understand that the trust of healthcare providers and patients comes with profound responsibility. Our technologies support more than 1 billion patients annually, generating critical data that drives life-saving decisions.

Our seven Responsible AI Principles (Safety, Validity and Reliability, Security and Resiliency, Accountability and Transparency, Explainability and Interpretability, Privacy, and Fairness with Harmful Bias Managed) guide every stage of our product development.

Our commitment to sustainability is crucial in an era where AI systems consume increasing amounts of global energy resources. We’re dedicated to developing AI systems in a sustainable manner that embraces opportunities for socially conscious use.

In this article, we’ll explore how GE HealthCare pioneered new image reconstruction techniques that train highly efficient neural networks, transforming what was once computationally infeasible into practical, energy-efficient solutions that benefit both patients and our planet.

Understanding image reconstruction

Before diving into our innovative approaches, it’s essential to understand what image reconstruction means in the context of medical imaging. For example, when a patient undergoes a Computed Tomography (CT) scan, the machine doesn’t directly capture the cross-sectional images we see in medical reports. Instead, it measures billions of sensor data samples corresponding to thousands of X-ray projections from different angles as the scanner rotates around the patient. The process of transforming these raw measurements into the detailed anatomical images that physicians use for diagnosis is called tomographic reconstruction.

Think of it this way: imagine trying to understand the internal structure of a complex object by only looking at its shadows from different angles. Each shadow provides limited information, but by combining hundreds or thousands of these shadows mathematically, we can reconstruct the object’s internal structure. This is essentially what tomographic reconstruction accomplishes.

Historically, CT reconstruction has relied on two primary approaches.

The first, analytical reconstruction, uses mathematical inverse transforms to convert projection data into images. This method is fast and produces good results for complete, high-quality datasets. For a typical CT image of N×N pixels (for example, 512×512), the computational work grows as the cube of N, or O(N³), so doubling the image resolution increases the computation time eightfold. That sounds steep, but for standard clinical imaging it remains manageable on modern computers.

The second, iterative reconstruction, uses a forward model (essentially a simulator of the CT scanner) to refine the image step by step. Starting from an initial guess, the algorithm repeatedly projects the current image estimate, compares the result with the actual measurements, and adjusts the image to better match the data. This method can handle challenging scenarios such as noisy or incomplete data, but it is computationally expensive because it requires many iterations of forward and back projection.
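The project, compare, and adjust loop of iterative reconstruction can be sketched on a toy problem. The example below is a minimal illustration, not a clinical algorithm: it assumes a 2×2 image, six hypothetical “rays” (row, column, and diagonal sums) as the forward model, and a simple gradient-style update (a Landweber iteration) with a hand-picked step size. All names are illustrative.

```python
# Toy iterative reconstruction: 2x2 image, 6 noise-free line sums.
# Assumption: each row of system_matrix marks which pixels one ray crosses.
system_matrix = [
    [1, 1, 0, 0],  # top row
    [0, 0, 1, 1],  # bottom row
    [1, 0, 1, 0],  # left column
    [0, 1, 0, 1],  # right column
    [1, 0, 0, 1],  # main diagonal
    [0, 1, 1, 0],  # anti-diagonal
]


def forward_project(matrix, image):
    """Simulate the scanner: one line sum per ray."""
    return [sum(a * x for a, x in zip(row, image)) for row in matrix]


def back_project(matrix, residual):
    """Spread each ray's mismatch back onto the pixels it crosses."""
    n_pixels = len(matrix[0])
    return [
        sum(matrix[i][j] * residual[i] for i in range(len(matrix)))
        for j in range(n_pixels)
    ]


def iterative_reconstruct(matrix, measurements, step, n_iters):
    image = [0.0] * len(matrix[0])  # initial guess: empty image
    for _ in range(n_iters):
        estimate = forward_project(matrix, image)                   # project
        residual = [m - e for m, e in zip(measurements, estimate)]  # compare
        correction = back_project(matrix, residual)                 # adjust
        image = [x + step * c for x, c in zip(image, correction)]
    return image


true_image = [1.0, 2.0, 3.0, 4.0]
measurements = forward_project(system_matrix, true_image)
# The step size 1/6 is chosen by hand to suit this small matrix.
reconstruction = iterative_reconstruct(system_matrix, measurements, 1.0 / 6.0, 50)
print([round(v, 4) for v in reconstruction])
```

Even in this tiny case, every iteration costs one forward projection and one back projection, which is why the iteration count dominates the cost at clinical scale.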

The dimensionality challenge

Consider a typical 2D CT scan: the raw data (called a sinogram) contains measurements from detector channels (the individual X-ray sensors arranged in an arc around the patient) and projection views (the measurements taken at each angle as the scanner rotates). For example, with 888 detector channels × 984 projection views, we have 888 sensors taking measurements at 984 different rotation angles. The output image is 512 × 512 pixels. Connecting every input sample directly to every output pixel would require a system matrix of 888 × 984 × 512 × 512 entries, or roughly 229 billion parameters. And this is just for 2D; modern clinical CT is performed in 3D, where the problem becomes orders of magnitude more complex.
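The arithmetic behind that figure is easy to check. The short sketch below reproduces the count; the memory estimate additionally assumes 4 bytes (32-bit floats) per weight, which is an illustrative choice rather than a figure from our systems.

```python
# Size of a dense sinogram-to-image mapping for the 2D example above.
n_channels = 888   # detector channels
n_views = 984      # projection views per rotation
image_side = 512   # output image is image_side x image_side

sinogram_samples = n_channels * n_views
image_pixels = image_side * image_side
dense_weights = sinogram_samples * image_pixels

# Assumption: one 32-bit float (4 bytes) per weight.
bytes_fp32 = dense_weights * 4

print(f"sinogram samples: {sinogram_samples:,}")
print(f"image pixels:     {image_pixels:,}")
print(f"dense weights:    {dense_weights:,}")
print(f"memory at fp32:   {bytes_fp32 / 1e9:.0f} GB")
```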

The computational requirements make it practically impossible to train a neural network with this many connections. A fully connected network of this scale is impractical to implement even on today’s most advanced hardware, which rules out a brute-force approach.

Tomography and line integrals

In CT scanning, each measurement represents a line integral: the total X-ray attenuation along a straight path through the patient’s body. X-ray attenuation refers to how much the X-ray beam weakens as it passes through different tissues: dense materials like bone block more X-rays (high attenuation), while soft tissues and air block fewer X-rays (low attenuation). The sinogram, our raw data, is a collection of these line integrals from different angles and positions.
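To make the idea of a line integral concrete, the sketch below follows one ray through a row of voxels using the Beer-Lambert law, under which intensity falls off exponentially with the summed attenuation along the path. The attenuation coefficients and the 1 cm step are rough illustrative assumptions, not calibrated values.

```python
import math


def line_integral(mu_values, step_cm):
    """Total attenuation along the ray: sum of mu * path length per voxel."""
    return sum(mu * step_cm for mu in mu_values)


def detected_intensity(source_intensity, mu_values, step_cm):
    """Beer-Lambert law: intensity decays exponentially with the line integral."""
    return source_intensity * math.exp(-line_integral(mu_values, step_cm))


# Assumed attenuation coefficients (per cm) along one ray:
# air, soft tissue, soft tissue, bone, soft tissue, air.
ray_path = [0.0, 0.2, 0.2, 0.5, 0.2, 0.0]
step_cm = 1.0
source_intensity = 1000.0

projection = line_integral(ray_path, step_cm)
intensity = detected_intensity(source_intensity, ray_path, step_cm)

# Taking the negative log of the intensity ratio recovers the line integral,
# which is the value stored in the sinogram for this ray.
recovered = -math.log(intensity / source_intensity)
print(projection, recovered)
```

Note that the detector only sees the total along the ray: swapping the bone voxel with a soft-tissue voxel leaves the measurement unchanged, which is why many angles are needed.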

A voxel (think of it as a tiny 3D cube that represents a small piece of tissue in the body, just as a pixel is a tiny square in a 2D photo) is influenced by many line integrals passing through it. Conversely, each line integral is affected by many voxels along its path. This creates what mathematicians call an inverse problem, and a tightly coupled one: every measurement touches many unknowns, and every unknown is constrained by many measurements. The reconstruction challenge is to untangle this web of relationships to determine the actual density values of each voxel.

GE HealthCare’s novel approach: Learning from human vision

The breakthrough insight came from an unexpected source: human depth perception. Consider how human vision works: with only one eye, we lack depth information and see only a flat projection. However, by moving slightly to the side or using both eyes, we gain partial depth information. Moving further around an object provides progressively more depth information, and a complete rotation gives full three-dimensional understanding.

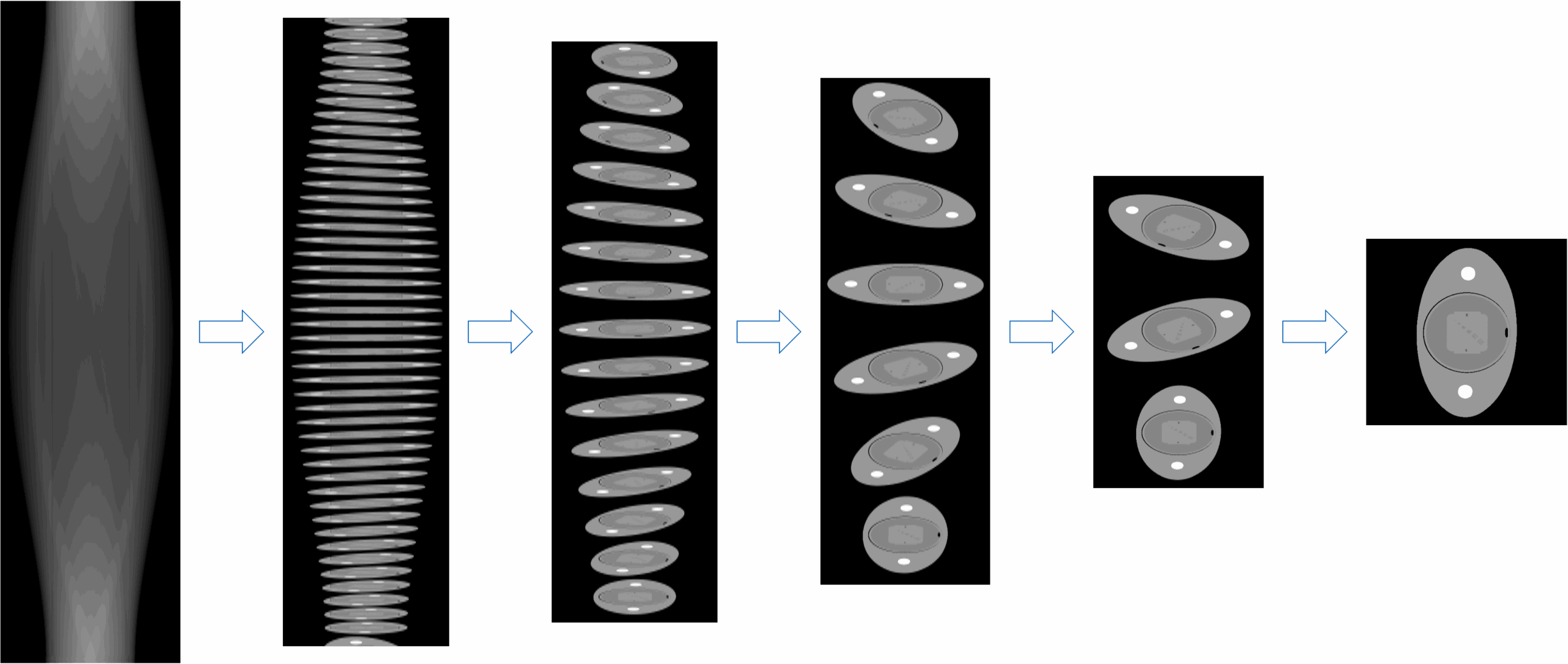

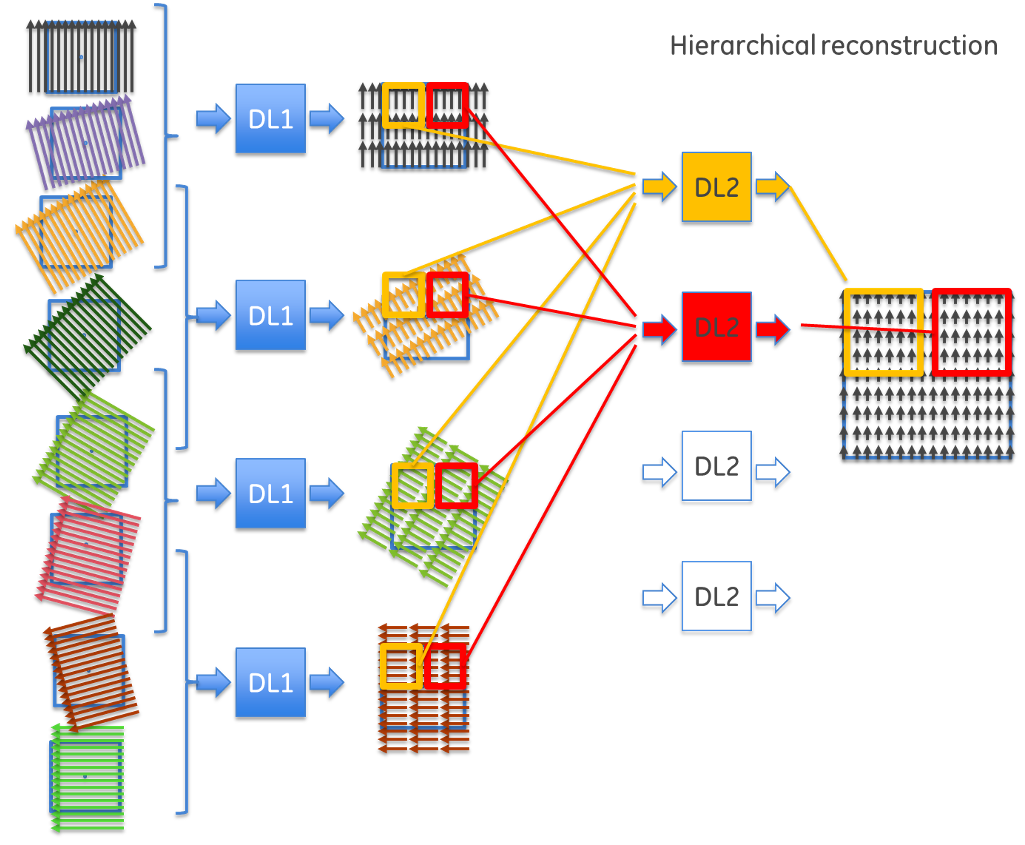

This observation led to a hierarchical approach to reconstruction. Instead of trying to solve the entire problem at once, the team developed a method that takes projections from nearby angles, combines them to extract partial depth information, then gradually builds up the complete image through multiple stages.

This insight led to a new network architecture that tackles reconstruction through hierarchical decomposition. Rather than using one massive neural network with billions of connections, this approach creates a network of smaller, specialized networks working in sequence. By organizing the reconstruction hierarchically, the team dramatically reduced the computational complexity, transforming an otherwise intractable problem into a solvable one.

The process works in stages: first, groups of projections from similar angles are processed by small neural networks to extract initial depth information. In intermediate stages, these partial results are combined and refined through additional network layers, gradually building more complete depth information. Finally, the last network layers assemble the complete image from the accumulated depth data.
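The staged structure can be illustrated with a simple tree-style reduction. This is only a structural sketch under loud assumptions: the article does not specify group sizes or what the small networks compute, so the code below assumes pairwise grouping of neighbouring views and uses plain averaging as a placeholder where a small learned network would sit. All function names are hypothetical.

```python
def merge_neighbours(left, right):
    """Placeholder for a small learned network that fuses two partial results.
    Assumption: plain averaging stands in for the learned operation."""
    return [(a + b) / 2.0 for a, b in zip(left, right)]


def hierarchical_combine(partials):
    """Merge neighbouring partial results stage by stage until one remains."""
    stages = 0
    while len(partials) > 1:
        merged = []
        # Pair up neighbours: (0, 1), (2, 3), ...
        for i in range(0, len(partials) - 1, 2):
            merged.append(merge_neighbours(partials[i], partials[i + 1]))
        # An unpaired last item is carried forward to the next stage.
        if len(partials) % 2 == 1:
            merged.append(partials[-1])
        partials = merged
        stages += 1
    return partials[0], stages


# Assumption: 984 views, each reduced to a tiny 4-value feature for illustration.
n_views = 984
view_features = [[float(view)] * 4 for view in range(n_views)]

combined, n_stages = hierarchical_combine(view_features)
print(f"{n_views} views merged in {n_stages} stages")
```

The point of the sketch is the shape of the computation: each stage only connects neighbours, so the number of stages grows with the logarithm of the number of views rather than requiring every input to connect to every output.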

Beyond pure reconstruction: AI for accelerating traditional methods

GE HealthCare’s innovation extends beyond pure deep learning reconstruction. The team has also developed AI methods to accelerate traditional iterative reconstruction. Because iterative reconstruction can be computationally prohibitive, the team created a leapfrogging approach in which a neural network learns to predict the convergence behavior of iterative algorithms.

By observing how voxel values evolve during the first few iterations, the AI can predict what the values would be after many more iterations. In demonstrated examples, this reduced the number of required iterations from 40 to just 6.[1]
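The idea of predicting a converged value from early iterations can be illustrated without a neural network. The sketch below swaps in a classical extrapolation formula (Aitken’s delta-squared method) as a stand-in for the learned predictor, and it assumes a single voxel whose value approaches its limit geometrically. Both are simplifying assumptions for illustration; this is not the method used in our products.

```python
def run_iterations(start, target, rate, n_iters):
    """Toy iterative update: each step closes a fixed fraction of the gap.
    The target is used only to simulate the trajectory; a real solver
    would not know it in advance."""
    values = [start]
    for _ in range(n_iters):
        values.append(values[-1] + rate * (target - values[-1]))
    return values


def aitken_extrapolate(x0, x1, x2):
    """Estimate the limit of a sequence from three consecutive iterates."""
    first_diff = x1 - x0
    second_diff = x2 - 2.0 * x1 + x0
    if second_diff == 0.0:
        return x2  # sequence already flat; nothing to extrapolate
    return x0 - first_diff * first_diff / second_diff


target = 1.0
early = run_iterations(0.0, target, 0.2, 6)        # only 6 iterations
predicted = aitken_extrapolate(early[-3], early[-2], early[-1])
full = run_iterations(0.0, target, 0.2, 40)[-1]    # 40 iterations, for comparison

print(f"after 6 iterations:  {early[-1]:.6f}")
print(f"leapfrog prediction: {predicted:.6f}")
print(f"after 40 iterations: {full:.6f}")
```

Real voxel trajectories are not perfectly geometric, which is where a learned predictor has an advantage over a fixed formula like this one.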

These techniques represent a different but equally important path to sustainability: using machine learning not to replace traditional methods entirely, but to make them dramatically more efficient. The AI essentially saves computational resources in non-AI processes, demonstrating the versatility of machine learning in reducing energy consumption.

This exploration of sustainable AI in CT reconstruction represents just one facet of GE HealthCare’s comprehensive approach to Responsible AI. From testing federated learning techniques aimed at preserving patient privacy while enabling collaborative research, to explainable AI methods that help clinicians understand and trust AI recommendations, our commitment to developing AI that serves both people and planet continues to drive innovation.

[1] 2017 GE Internal Research